What if speed and cost reduction weren’t trade-offs?

There’s a chart every technology leader knows by heart. It lives on consultant slides and vendor pitches. It shows the Iron Triangle: Scope, Time, and Cost. The rule is simple: Pick two.

Want it fast? It’ll cost more. Want it cheap? It’ll take longer.

We’ve accepted this for decades. It’s the “physics” of software delivery. Except… I don’t think those laws apply anymore.

The Reality of the “Delivery Tax”

I’ve spent 15 years building data platforms for retailers - G-Star, Moss Bros, WHSmith. The pattern is always the same. We focus on “coding,” but coding is rarely the bottleneck. The real time-sink is the Delivery Tax:

- Understanding: Days of meetings to clarify business context and requirements.

- Research: Weeks spent identifying patterns, anti-patterns, and framework specifics.

- Architecture: Expensive senior time spent making and documenting trade-off decisions.

- Validation: The “two steps forward, one step back” of testing and debugging.

- Documentation: The rushed READMEs and runbooks usually left for the final hour.

This is why a typical enterprise data platform requires a team of 5 specialists working for 6-9 months. That is the benchmark I have seen across the industry.

Then, in late 2024, the relationship between these phases shifted.

The Numbers That Shouldn’t Exist

I am currently two weeks into a 12-week project: an enterprise Databricks Lakehouse on Azure, involving five data source integrations and ERP connectivity.

When I compared my current progress against traditional benchmarks, the math stopped making sense.

The Project-Level View (Efficiency)

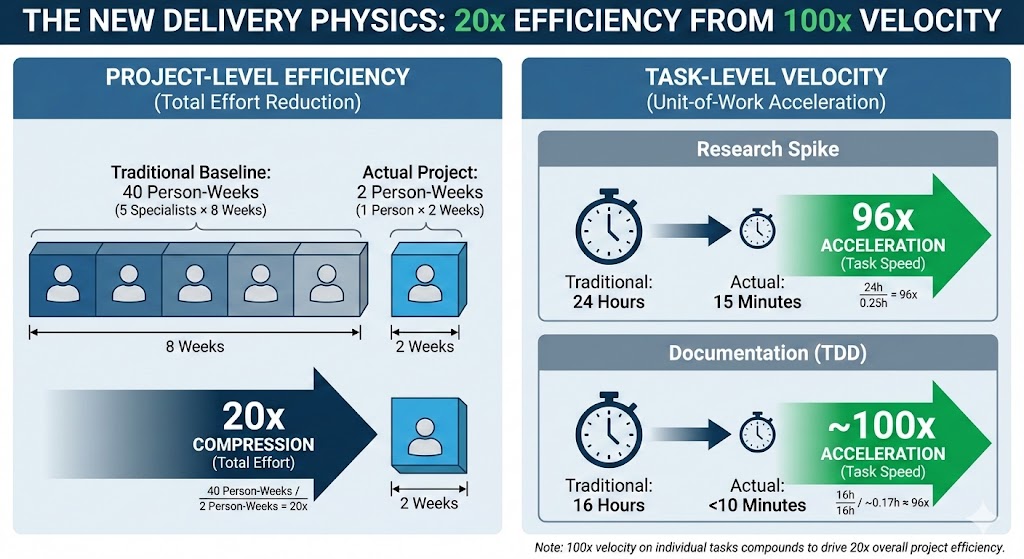

In terms of total human effort, the reduction is massive. We are seeing a 20x compression in the total resources required to hit a milestone.

| Metric | Traditional Baseline | Actual (Current Project) | Multiplier |
|---|---|---|---|
| Team Size | 5 Specialists | 1 Person | 5x |
| Duration | 8 Weeks (to current stage) | 2 Weeks | 4x |
| Total Effort | 40 Person-Weeks | 2 Person-Weeks | 20x |
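For anyone who wants to check the arithmetic, here is a minimal sketch of how the multipliers in the table combine. The `Delivery` class and variable names are illustrative only, not part of any tooling used on the project; the inputs are the figures from the table.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Delivery:
    """Hypothetical container for one delivery scenario."""
    team_size: int  # number of people
    weeks: int      # elapsed weeks to reach the same milestone

    @property
    def person_weeks(self) -> int:
        # Total effort is headcount multiplied by elapsed time.
        return self.team_size * self.weeks


# Figures taken from the table above.
baseline = Delivery(team_size=5, weeks=8)
actual = Delivery(team_size=1, weeks=2)

team_multiplier = baseline.team_size / actual.team_size
duration_multiplier = baseline.weeks / actual.weeks
effort_multiplier = baseline.person_weeks / actual.person_weeks

# A 20x effort multiplier is the same thing as a 95% reduction in effort.
effort_reduction = 1 - 1 / effort_multiplier

print(f"Team: {team_multiplier:.0f}x, duration: {duration_multiplier:.0f}x")
print(f"Effort: {effort_multiplier:.0f}x ({effort_reduction:.0%} reduction)")
```

The effort multiplier is simply the product of the team and duration multipliers (5 x 4 = 20), which is why shrinking both dimensions at once compounds so quickly.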

The Task-Level View (Velocity)

While the project-level gain is 20x, the unit-of-work velocity - the “physics” of an individual task - is hitting roughly 100x acceleration (about 96x in each of the two examples below). This is where the game truly changes.

- The 100x Research Spike: A complex integration pattern that previously required 3 days (24 hours) of senior research now takes 15 minutes of structured, context-aware synthesis.

- The 100x Documentation Loop: Technical design docs that used to take 2 days (16 hours) to draft now “self-generate” from the code and architectural context in under 10 minutes.
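The task-level ratios can be checked the same way. This is a rough sketch using only the durations quoted in the two bullets; the `speedup` helper is a hypothetical name, and the hour figures follow the 8-hour working day implied by "3 days (24 hours)".

```python
def speedup(before_hours: float, after_minutes: float) -> float:
    """Ratio of the old duration to the new one, both converted to minutes."""
    return (before_hours * 60) / after_minutes


# Research spike: 3 working days (24 hours) down to 15 minutes.
research = speedup(before_hours=24, after_minutes=15)

# Design documentation: 2 working days (16 hours) down to 10 minutes.
docs = speedup(before_hours=16, after_minutes=10)

print(f"Research: {research:.0f}x, documentation: {docs:.0f}x")
```

Both tasks come out at 96x, so "100x" is a rounded figure rather than an exact one.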

It’s the Wrong Question

When I share these numbers, the first question is always: “What AI tools are you using?”

That is the wrong question. If AI just made “typing code” faster, we’d see a 2x improvement at best. The acceleration isn’t coming from a better IDE; it’s coming from a change across the entire delivery lifecycle.

The speed comes from knowing what to ask for. The quality comes from recognising when the answer is wrong.

A junior team with “AI tools” is still a junior team grinding through documentation for the first time. But a person who has solved these problems for 15 years, working within a workflow that amplifies that experience? That is a different proposition entirely.

The 2026 Reality

I’m not suggesting AI replaces engineering teams. But I am suggesting that the assumptions baked into every SOW, every project plan, and every hiring decision are now based on constraints that no longer exist.

- What happens to your roadmap when a 6-month initiative becomes 6 weeks?

- What happens to “Build vs. Buy” when the cost of building has dropped by 95%?

The Iron Triangle assumed that expertise was scarce and context was expensive to transfer.

I don’t think the Iron Triangle survives 2026.

The Proving Ground

I’m documenting this entire project week-by-week. No NDAs on the methodology - just on the client specifics. If you’re a CTO wondering what is vendor theatre versus what is real, I’ll share what I’m learning.

If you think these numbers are impossible, I’d genuinely like to hear why. I’m still making sense of this new physics myself.

James Weeks is the founder of Code Velocity Labs. He builds data platforms for retailers and tracks what’s actually possible when AI-native delivery methods replace traditional approaches. Connect on LinkedIn or email to compare notes.